Clinical and Technical Aspects in Free Cortisol Measurement


Abstract

Accurate measurement of cortisol is critical in adrenal insufficiency because it reduces the risk of misdiagnosis and supports optimization of the stress dose. Because total cortisol levels are affected by changes in the structure or circulating levels of corticoid-binding globulin and albumin, the main reservoirs of cortisol in the human body, various assays have been developed to determine bioactive free cortisol, and their clinical and analytical performance has been extensively discussed. Antibody-based immunoassays are routinely used in clinical laboratories; however, their lack of molecular specificity for cortisol limits their applicability in characterizing adrenocortical function. Improved specificity and sensitivity can be achieved by mass spectrometry coupled with chromatographic separation, a cutting-edge technology that can measure individual steroids as well as steroid panels in a single analytical run. This review introduces recent advances in free cortisol measurement from the perspective of clinical specimens and discusses issues associated with prospective analytical technologies.

INTRODUCTION

The adrenal gland consists of the mesodermal cortex and the neuroectodermal medulla, which produce different signaling hormones [1,2]. In contrast to medullary catecholamines, which respond instantly to biochemical stress, the adrenal steroids secreted by the cortex, including mineralocorticoids and glucocorticoids, mediate prolonged responses [3]. Cortisol, the major glucocorticoid, is synthesized in the zona fasciculata of the human adrenal cortex, and its concentration reflects acute, chronic, and diurnal changes in physiological and psychological events [4,5].

In addition to cortisol's biological role as a stress mediator, homeostatic regulation by the hypothalamus–pituitary–adrenal (HPA) axis maintains cortisol levels within the physiological range, and disruption of this dynamic equilibrium may cause functional changes in cells and organs in disease [6,7]. In patients with glucocorticoid-induced adrenal insufficiency, the stress dose must be optimized during glucocorticoid treatment and after withdrawal according to the rate of endogenous cortisol production under unstressed conditions [8]. However, whether and when blood cortisol concentrations return to physiological levels in critical illness has not yet been established, although dynamic alterations in the blood levels of adrenocorticotropic hormone (ACTH), cortisol, and cortisol-binding globulin (CBG) have been described in the acute and chronic phases [9].

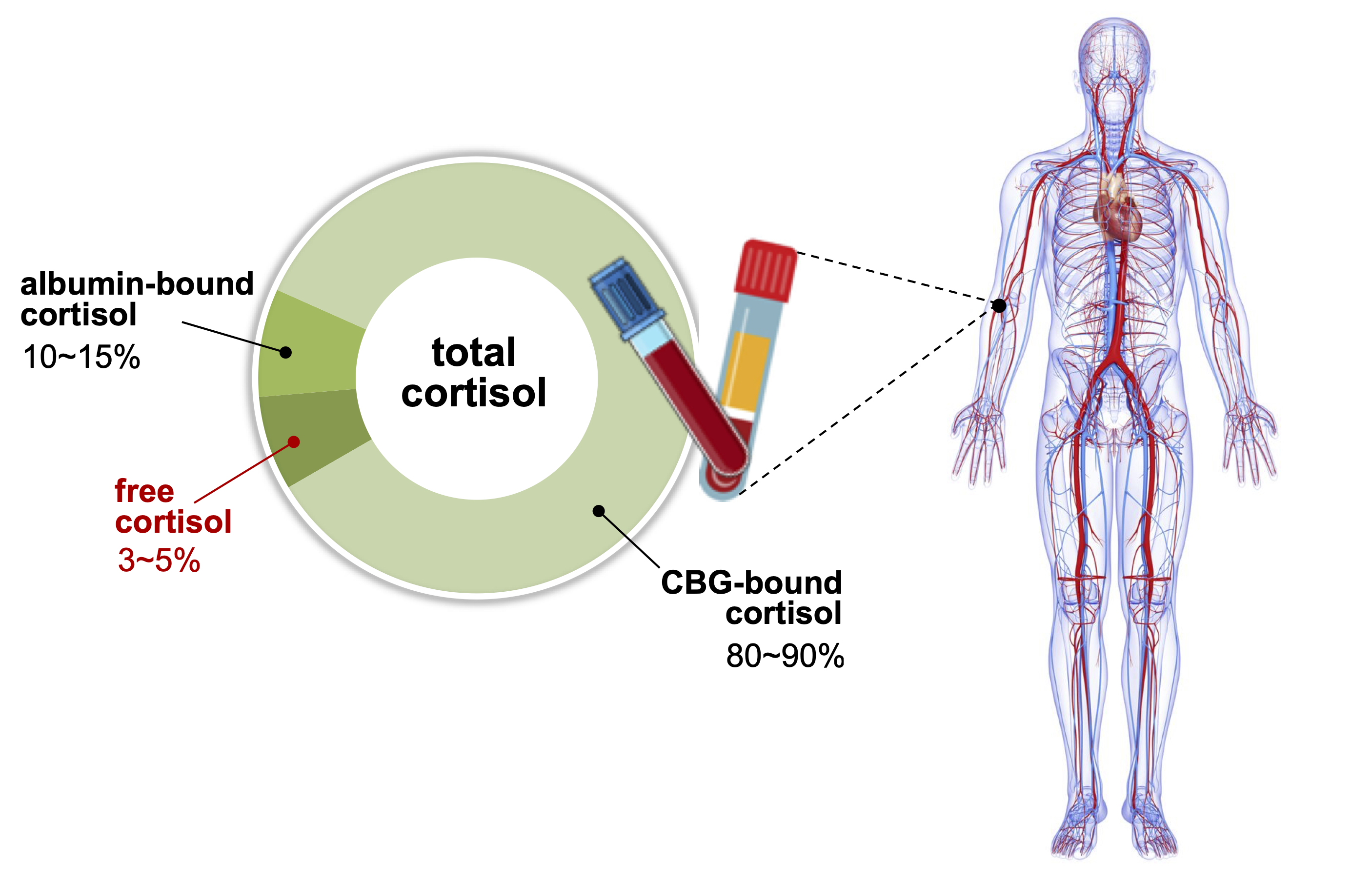

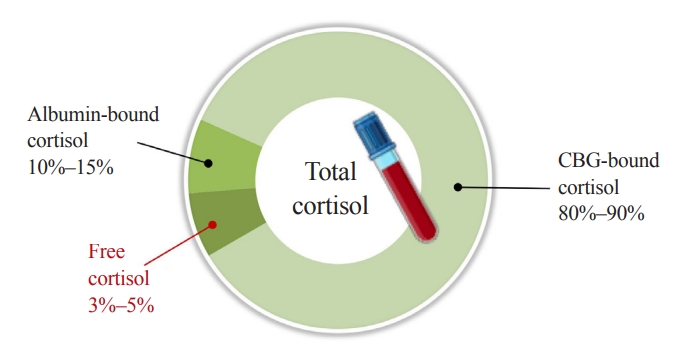

Comprehensive evaluation of cortisol is a prerequisite for diagnosing adrenal disorders and for ensuring optimal therapeutic effects of glucocorticoid replacement; however, clinical laboratories provide limited information on blood cortisol concentrations [10,11]. Blood cortisol circulates mostly bound to CBG and albumin, and this binding prevents glucocorticoids from penetrating the membranes of target cells. CBG plays critical roles in regulating the bioavailability and metabolic clearance of glucocorticoids, and only 3% to 5% of total blood cortisol exists in its bioactive form as unbound free cortisol (Fig. 1). Because the enzyme-linked immunoassays used routinely in laboratory medicine measure total cortisol, free cortisol has received little attention in clinical practice. Free cortisol has been measured directly using additional sample preparation techniques; however, these methods may result in inaccurate quantification [10]. This review introduces the current status of immunoaffinity-based technical developments for measuring free cortisol in clinical laboratories and the clinical significance of free cortisol, followed by a perspective on addressing technical issues in immunoassay and mass spectrometry.

Distribution of cortisol in human blood. Corticoid-binding globulin (CBG) and albumin are the main reservoirs of cortisol, binding 80%–90% and 10%–15% of total cortisol, respectively. Approximately 3%–5% of total cortisol is present as biologically active free cortisol. Altered CBG and albumin concentrations may therefore lead to misrepresentation of cortisol action when it is judged from total cortisol levels.
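The percentages in Fig. 1 can be turned into a small arithmetic sketch. The fractions below (83% CBG-bound, 13% albumin-bound, 4% free) are illustrative values picked from within the quoted ranges so that they sum to 100%; they are assumptions, not measured data. The last function is a deliberately naive what-if that ignores re-equilibration and only illustrates why a fall in CBG lowers total cortisol without implying a fall in free cortisol.

```python
from typing import Dict, Tuple

# Illustrative fractions chosen from the ranges in Fig. 1 (assumption, not measured data).
FRACTIONS = {"cbg_bound": 0.83, "albumin_bound": 0.13, "free": 0.04}


def partition_total(total_nmol_l: float, fractions: Dict[str, float] = FRACTIONS) -> Dict[str, float]:
    """Split a total cortisol concentration into CBG-bound, albumin-bound, and free pools."""
    if abs(sum(fractions.values()) - 1.0) > 1e-9:
        raise ValueError("fractions must sum to 1")
    return {pool: total_nmol_l * f for pool, f in fractions.items()}


def free_range(total_nmol_l: float, low: float = 0.03, high: float = 0.05) -> Tuple[float, float]:
    """Free cortisol implied by the 3%-5% free fraction quoted in the text."""
    return total_nmol_l * low, total_nmol_l * high


def total_after_cbg_change(total_nmol_l: float, cbg_scale: float) -> float:
    """Naive what-if: rescale only the CBG-bound pool and hold the other pools fixed.

    This ignores the re-equilibration that would occur in vivo; it only shows how
    total cortisol can fall while the free pool is unchanged.
    """
    pools = partition_total(total_nmol_l)
    return pools["cbg_bound"] * cbg_scale + pools["albumin_bound"] + pools["free"]
```

With a hypothetical total of 400 nmol/L, `free_range(400.0)` spans 12 to 20 nmol/L, and halving the CBG-bound pool in this toy model lowers total cortisol to about 234 nmol/L while the free pool stays at 16 nmol/L.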

DYNAMIC EQUILIBRIUM OF CORTISOL

Bound and free cortisol in the blood remain in dynamic equilibrium. Because CBG is temperature-sensitive, it releases cortisol in response to fever and external heat: the binding affinity of cortisol for CBG declines approximately 16-fold as temperature increases from 35°C to 42°C [12,13]. Systemic inflammation-driven proteolysis can also alter CBG and albumin levels [14]. Cleavage of human CBG by neutrophil elastase causes a conformational change and a substantial loss of cortisol-binding affinity, thereby regulating cortisol bioavailability [15]. Prereceptor regulation of cortisol involves both its binding affinity for CBG and cortisol–cortisone interconversion catalyzed by 11β-hydroxysteroid dehydrogenase (11β-HSD); both mechanisms affect the availability of cortisol for binding to the glucocorticoid receptor [16]. 11β-HSD modulates cortisol bioavailability through activation or inactivation at both the systemic and local tissue levels. Besides physiological conditions, medications can also affect circulating CBG levels by directly regulating its production and clearance.
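The 16-fold fall in affinity between 35°C and 42°C can be made concrete with a toy calculation. The sketch assumes that affinity declines log-linearly with temperature between the two reported points and that the free fraction follows a single-site model, f = 1/(1 + K·C), with a fixed, unsaturated CBG pool C and no albumin binding. These assumptions and the 4% baseline free fraction at 37°C are illustrative and are not taken from [12,13].

```python
# Anchor points from the text: CBG affinity for cortisol drops ~16-fold from 35 °C to 42 °C.
T_LOW_C, T_HIGH_C, FOLD_DROP = 35.0, 42.0, 16.0


def relative_affinity(temp_c: float) -> float:
    """CBG affinity relative to 35 °C, assuming a log-linear decline (assumption)."""
    return FOLD_DROP ** (-(temp_c - T_LOW_C) / (T_HIGH_C - T_LOW_C))


def free_fraction_at(temp_c: float, base_free_fraction: float = 0.04, base_temp_c: float = 37.0) -> float:
    """Free cortisol fraction at temp_c under a toy single-site binding model.

    Assumes f = 1 / (1 + K * C) with a constant, unsaturated CBG pool C and no
    albumin binding, so only K changes with temperature.
    """
    if not 0.0 < base_free_fraction < 1.0:
        raise ValueError("base_free_fraction must be between 0 and 1")
    kc_base = 1.0 / base_free_fraction - 1.0
    kc_new = kc_base * relative_affinity(temp_c) / relative_affinity(base_temp_c)
    return 1.0 / (1.0 + kc_new)


if __name__ == "__main__":
    for t in (37.0, 39.0, 41.0):
        print(f"{t:.0f} °C: affinity x{relative_affinity(t):.2f}, free fraction {free_fraction_at(t):.3f}")
```

Under these assumptions, a rise from 37°C to 39°C multiplies CBG affinity by about 0.45 and raises the free fraction from 4% to about 8%, which illustrates why an assay equilibrated at 37°C may underestimate free cortisol in a febrile patient.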

Estrogens accelerate hepatic CBG production, and mitotane, an adrenolytic drug used to treat adrenocortical carcinoma, raises CBG through an estrogen-like effect, which complicates the frequent assessment of cortisol levels in these patients [17]. Similarly, elevated CBG levels induced by oral contraceptives lead to overestimation of cortisol action based on total cortisol levels and to underdiagnosis of adrenal insufficiency [18]. Acute decreases in CBG levels [15] and hypoproteinemia [19] have been observed in sepsis and acute illness, in which patients had subnormal total serum cortisol levels even though their adrenal function was normal. Therefore, measurement of free cortisol rather than total cortisol is recommended for better classification of hypoadrenal patients and may help prevent unnecessary glucocorticoid therapy [19,20]. Some mutations in SERPINA6, the gene encoding CBG, result in low or undetectable circulating CBG, whereas some polymorphisms structurally affect only the cortisol-binding site while CBG levels remain normal. These patients commonly present with low or undetectable total serum cortisol and normal ACTH levels, whereas their free cortisol levels in serum and saliva are consistently normal [16].

SERUM SAMPLING WITH PRETREATMENT METHODS

Accurate measurement of HPA function is key to supporting clinical decisions amid the controversies surrounding the diagnosis and treatment of hypoadrenalism [6]. An inadequate stress response is demonstrated by a subnormal response to adrenal stimulation; however, its diagnosis is based on total serum cortisol levels [21]. Reduced cortisol breakdown, related to suppression of cortisol-metabolizing enzymes, is associated with critical illness and results in hypercortisolemia [22]. CBG levels decrease in sepsis and critical illness, and free cortisol assays might underestimate free cortisol levels in febrile patients because measurements are usually performed at 37°C, which does not account for the effect of increased body temperature on the binding equilibrium [12,13].

Free cortisol levels in serum have been measured directly after removing the bound fractions by ultrafiltration or equilibrium dialysis, with acceptable inter-assay coefficients of variation (<10%) [23]. However, these labor-intensive procedures may introduce uncertainty into quantitative results, which should be addressed by both technical standardization and quality control in measuring serum free cortisol. Free cortisol can also be estimated from total cortisol and CBG levels, and the free cortisol index (FCI) correlates well with serum free cortisol levels measured using a steady-state gel-filtration method [24]. FCI may be a better tool than total serum cortisol for evaluating HPA status in conditions where CBG changes significantly under acute-phase stress [25]. However, accurate quantification of CBG is necessary because individual variations in its tertiary structure result in different binding affinities for cortisol [26].
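Both quantities in this paragraph reduce to simple arithmetic. The sketch below computes an inter-assay coefficient of variation from repeated runs of one control sample and an FCI as the ratio of total cortisol to CBG. The nmol/L-over-mg/L unit convention for FCI is an assumption (it is used in some studies, but published FCI definitions and units differ), and the function names are hypothetical.

```python
import statistics
from typing import Sequence


def inter_assay_cv_percent(results: Sequence[float]) -> float:
    """Coefficient of variation (%) of repeated measurements of one control sample."""
    if len(results) < 2:
        raise ValueError("need at least two runs")
    mean = statistics.mean(results)
    if mean == 0:
        raise ValueError("mean must be non-zero")
    return 100.0 * statistics.stdev(results) / mean


def free_cortisol_index(total_cortisol_nmol_l: float, cbg_mg_l: float) -> float:
    """FCI as the ratio of total cortisol to CBG.

    Units follow one convention (nmol/L over mg/L); FCI values or cut-offs are
    only comparable when computed with the same convention.
    """
    if cbg_mg_l <= 0:
        raise ValueError("CBG must be positive")
    return total_cortisol_nmol_l / cbg_mg_l
```

For example, a hypothetical total cortisol of 400 nmol/L gives an FCI of 10 with CBG at 40 mg/L but 20 with CBG at 20 mg/L, which is how the index flags a higher free share when CBG falls in acute-phase stress. Runs of 9, 10, and 11 nmol/L give a CV of exactly 10%, at the edge of the acceptance limit cited above.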

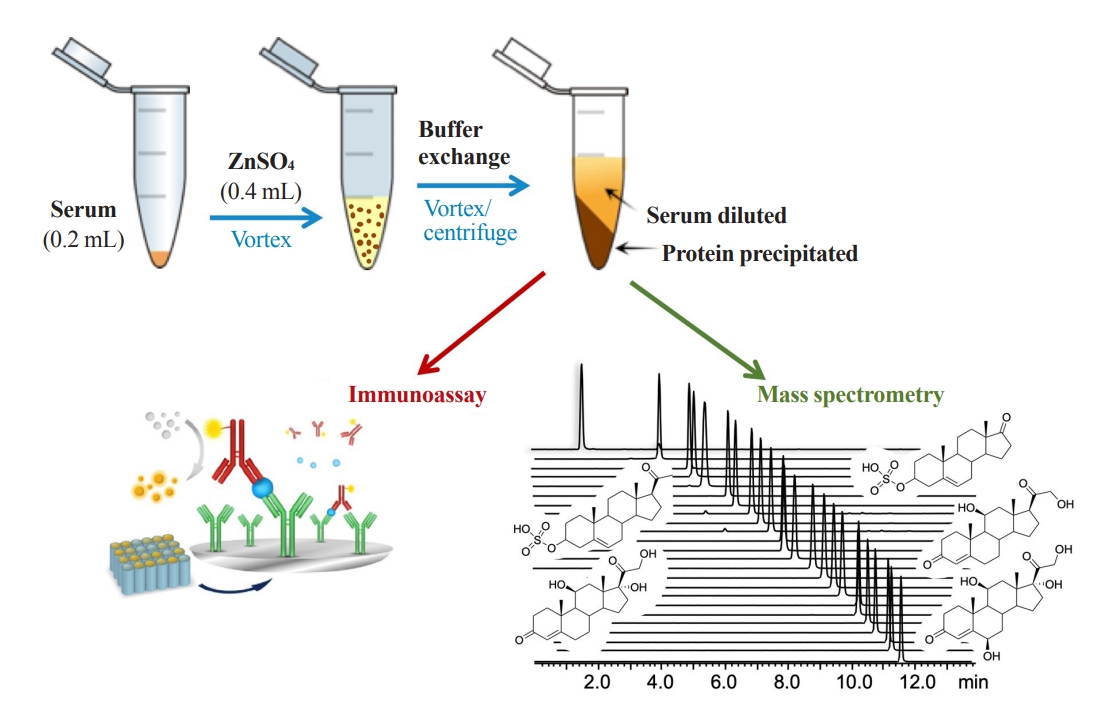

Zinc sulfate is used to precipitate large proteins, such as immunoglobulins and albumin, at ratios of 2:1 (v/v) or higher relative to serum. Acetonitrile or methanol then precipitates the relatively smaller proteins and the remaining zinc sulfate [27]; however, organic solvents are not directly compatible with immunoassays. Unbound cortisol levels in serum can be determined in samples deproteinized with zinc sulfate/methanol, with or without further purification before liquid chromatography-mass spectrometry (LC-MS) analysis, which can be implemented in routine clinical laboratories [28,29]. Because zinc ions and acetonitrile/methanol denature antibodies, these methods cannot be used in immunoassays; however, precipitating proteins with zinc sulfate and then precipitating the abundant remaining zinc ions may make samples suitable for measuring free cortisol by both mass spectrometry and immunoassay (Fig. 2).

A schematic diagram of free cortisol analysis in serum. Zinc sulfate is used to precipitate large proteins in serum, and an aqueous buffer exchange is then performed to further precipitate the remaining small proteins and zinc ions in the sample solution. Samples are vortexed and centrifuged, and only the supernatant is analyzed by mass spectrometry or immunoassay.
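The volumes in a precipitation protocol also fix the dilution that must be undone when reporting a serum concentration. The sketch below encodes the ≥2:1 (v/v) zinc sulfate ratio from the text; the solvent volume, the class and function names, and the assumption that volumes are additive with the pellet volume ignored are all hypothetical simplifications (in practice, an internal standard added before precipitation usually absorbs such errors).

```python
from dataclasses import dataclass


@dataclass
class PrecipitationPlan:
    """Volumes for a zinc sulfate / organic solvent protein precipitation (hypothetical)."""

    serum_ul: float
    zinc_ratio: float = 2.0  # zinc sulfate : serum (v/v); the text cites 2:1 or higher
    solvent_ul: float = 0.0  # methanol or acetonitrile volume, chosen by the laboratory

    def __post_init__(self) -> None:
        if self.serum_ul <= 0 or self.solvent_ul < 0:
            raise ValueError("serum volume must be positive and solvent volume non-negative")
        if self.zinc_ratio < 2.0:
            raise ValueError("zinc sulfate should be at least 2:1 (v/v) with serum")

    @property
    def zinc_ul(self) -> float:
        return self.serum_ul * self.zinc_ratio

    @property
    def total_ul(self) -> float:
        return self.serum_ul + self.zinc_ul + self.solvent_ul

    @property
    def dilution_factor(self) -> float:
        # Assumes additive volumes and a supernatant with the same concentration
        # as the whole mixture (pellet volume ignored).
        return self.total_ul / self.serum_ul


def serum_concentration(measured_in_supernatant: float, plan: PrecipitationPlan) -> float:
    """Back-calculate the serum concentration from the supernatant result."""
    return measured_in_supernatant * plan.dilution_factor
```

With 100 µL of serum, 200 µL of zinc sulfate, and 300 µL of methanol, the dilution factor is 6, so a supernatant reading of 2.5 nmol/L corresponds to 15 nmol/L in serum.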

SALIVARY CORTISOL

Most salivary compounds are derived from the blood via passive diffusion or active transport. Cortisol diffuses into the salivary gland independently of the salivary flow rate, and salivary levels reflect the circadian rhythm and early morning peak [30]. Because cortisol in saliva is unbound, its concentration may be independent of CBG levels in the blood. Saliva sampling is noninvasive and therefore minimizes the stress-induced overestimation observed with blood sampling; moreover, it compensates for the inability of urinary measurements to capture rapid changes [10]. Salivary cortisol has great potential in the diagnostic work-up of adrenal disorders; it can facilitate longitudinal studies of the HPA axis following stimulation with ACTH and corticotropin-releasing hormone, providing an alternative to the evaluation of free cortisol in human serum [31-33]. The results of a previous study were discouraging [31], but that study compared salivary cortisol with total rather than free serum cortisol. The oral hydrocortisone protocol may not be reliable because ingestion of the drug may increase salivary cortisol levels [32]. Basal and stimulated salivary cortisol concentrations have been correlated with total serum cortisol concentrations during the short Synacthen test, and an optimal cutoff of 13.2 nM for stimulated salivary cortisol has been proposed for diagnosing adrenal insufficiency [33].
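How an "optimal" cutoff such as 13.2 nM might be derived can be sketched with Youden's J statistic (sensitivity + specificity - 1), a common criterion for choosing a threshold on a ROC curve. The source does not state which criterion [33] used, so this is an illustrative assumption. The sketch treats a stimulated value at or below the cutoff as positive, because a low response is the abnormal direction in adrenal insufficiency.

```python
from typing import Sequence, Tuple


def youden_cutoff(values: Sequence[float], has_disease: Sequence[bool]) -> Tuple[float, float]:
    """Return (cutoff, J) maximizing Youden's J = sensitivity + specificity - 1.

    A result is called positive (suggesting adrenal insufficiency) when
    value <= cutoff. Ties keep the lowest cutoff reaching the maximum J.
    """
    if len(values) != len(has_disease):
        raise ValueError("values and labels must have equal length")
    n_disease = sum(has_disease)
    n_healthy = len(has_disease) - n_disease
    if n_disease == 0 or n_healthy == 0:
        raise ValueError("need both affected and unaffected subjects")

    best_cutoff, best_j = float("nan"), -1.0
    for cutoff in sorted(set(values)):
        tp = sum(1 for v, d in zip(values, has_disease) if d and v <= cutoff)
        tn = sum(1 for v, d in zip(values, has_disease) if not d and v > cutoff)
        j = tp / n_disease + tn / n_healthy - 1.0
        if j > best_j:
            best_cutoff, best_j = cutoff, j
    return best_cutoff, best_j
```

For the made-up values `[5, 8, 10, 12, 14, 18, 20, 25]` (nM) with the first four labeled as adrenal insufficiency, `youden_cutoff` returns `(12, 1.0)`. Real data overlap, so J is lower and the chosen cutoff trades sensitivity against specificity.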

A single midnight salivary cortisol test showed 100% sensitivity (95% confidence interval [CI], 62.9 to 100) and >96% specificity (95% CI, 92.8 to 100) at a cut-off of >6.1 nM for diagnosing Cushing’s syndrome (CS); therefore, it could be an alternative to 24-hour urinary free cortisol as the first-line outpatient screening test for CS [34,35]. In another night-time salivary cortisol (NSC) assay, higher cut-off values of 11.5 to 12.6 nM yielded 100% specificity and 93% sensitivity (CI, 89% to 98%) for diagnosing CS [36]. However, the NSC assay should be validated on a larger scale, and its reference range and cut-off values should be established to confirm its suitability for clinical practice. In the overnight 1 mg dexamethasone suppression test, salivary cortisol could be an acceptable biomarker of overt CS with a cut-off of 50 ng/mL; however, using it to identify mild autonomous cortisol secretion in adrenal incidentalomas, with lower cut-off values of 18 to 25 ng/mL, is not advisable [37]. In addition, although salivary cortisol is measurable at low concentrations, it is not a good indicator of hydrocortisone replacement in Addison’s disease [38].
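The sensitivity and specificity figures above come from 2×2 diagnostic tables. A minimal sketch of that arithmetic follows, using the Wilson score interval for the 95% CI. The interval method is an assumption: the cited studies may have used exact (Clopper-Pearson) intervals, which give different bounds for small groups, and the counts in the usage line are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Dict, Tuple


def wilson_interval(successes: int, n: int, confidence: float = 0.95) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion."""
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need 0 <= successes <= n and n > 0")
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p = successes / n
    denom = 1.0 + z * z / n
    center = (p + z * z / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z * z / (4 * n * n)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def diagnostic_summary(tp: int, fn: int, tn: int, fp: int, confidence: float = 0.95) -> Dict[str, object]:
    """Sensitivity and specificity with confidence intervals from a 2x2 table."""
    return {
        "sensitivity": tp / (tp + fn),
        "sensitivity_ci": wilson_interval(tp, tp + fn, confidence),
        "specificity": tn / (tn + fp),
        "specificity_ci": wilson_interval(tn, tn + fp, confidence),
    }
```

For example, `diagnostic_summary(tp=8, fn=0, tn=95, fp=3)` gives a sensitivity of 1.0 with a Wilson lower bound of about 0.68, showing how a perfect sensitivity based on few patients still carries a wide interval, as in the first study quoted above.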

Different devices for saliva sampling are available, and various absorbent materials, such as swabs, are used to facilitate saliva collection. Sterile cotton dental rolls, such as the Salivette (Sarstedt, Newton, NC, USA), are the most commonly used; however, non-cotton swabs, such as the polyester Salivette, are often recommended because cortisol may bind to cotton wool [39]. Saliva is recovered by centrifuging the swab, a critical step for obtaining reproducible quantities of salivary analytes. Several factors, including the distribution of mucosal transudate, affect salivary flux and composition, and whole-oral fluid collection may be the most feasible method. However, obtaining saliva from patients with sepsis and critical illness is challenging in practice, and adequate volumes cannot always be collected. A cotton bud may be an alternative sampling tool; in our preliminary experiment (unpublished), salivary cortisol levels obtained with cotton buds could be proportionally converted to those obtained with the Salivette swab.

Storing samples at room temperature is not recommended because bacterial growth begins in saliva within a few hours. Although cortisol and dehydroepiandrosterone (DHEA) levels are not affected, the salivary testosterone concentration changes [40]. Salivary cortisol in intact and centrifuged samples has been shown to be stable for 3 months at 4°C and for at least 1 year at –20°C. For long-term storage, –80°C may be preferable, and repeated freeze/thaw (F/T) cycles may not affect cortisol concentrations [41,42]. In fact, repeated F/T cycles of saliva are necessary before cortisol measurement to break down viscous salivary materials, such as mucin, and to disperse salivary proteins and lipids for efficient steroid extraction [43].

METABOLIC RATIO OF CORTISOL TO CORTISONE

The intracellular level of cortisol is balanced by synthesis and metabolism, and two isoforms of 11β-HSD regulate the physiological concentrations of active cortisol and biologically inactive cortisone [44]. In clinical practice, measurement of free cortisol has been established, but it may not be sufficient to indicate localized levels in target tissues or metabolic phenotypes altered by cellular and physiological functions [45,46]. Of the two isoforms, 11β-HSD1 converts inactive cortisone to active cortisol, and the ratio of cortisol to cortisone (F/E) reflects 11β-HSD1 enzymatic activity [47]. Increased F/E ratios in serum and plasma have been observed in hypertension, obesity, and metabolic syndrome, implicating altered 11β-HSD activity in their pathogenesis [48-50].
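Because this review reports cortisol in both nM and ng/mL, and because an F/E ratio is only meaningful when both steroids are expressed in the same molar units, a small conversion sketch may help. The molar masses are computed from the molecular formulas (cortisol C21H30O5, cortisone C21H28O5); the function names are hypothetical.

```python
MOLAR_MASS_G_PER_MOL = {
    "cortisol": 362.46,   # C21H30O5
    "cortisone": 360.45,  # C21H28O5
}


def ng_ml_to_nmol_l(conc_ng_ml: float, steroid: str) -> float:
    """Convert ng/mL (equivalent to ug/L) to nmol/L using the steroid's molar mass."""
    return conc_ng_ml * 1000.0 / MOLAR_MASS_G_PER_MOL[steroid]


def nmol_l_to_ng_ml(conc_nmol_l: float, steroid: str) -> float:
    """Convert nmol/L back to ng/mL."""
    return conc_nmol_l * MOLAR_MASS_G_PER_MOL[steroid] / 1000.0


def f_to_e_ratio(cortisol_nmol_l: float, cortisone_nmol_l: float) -> float:
    """Molar cortisol-to-cortisone (F/E) ratio."""
    if cortisone_nmol_l <= 0:
        raise ValueError("cortisone concentration must be positive")
    return cortisol_nmol_l / cortisone_nmol_l
```

For example, 1 ng/mL of cortisol is about 2.76 nmol/L, and equal mass concentrations of cortisol and cortisone give a molar F/E ratio slightly below 1 (about 0.994) because cortisol is the heavier molecule.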

Excessive and deficient production of glucocorticoids are clinical features of CS and Addison’s disease, respectively, and metabolic changes in glucocorticoids contribute to the pathophysiology of adrenal defects as well as hypertension, obesity, and metabolic syndrome [7]. Increased corticoid production is a protective response that meets physiological needs under stress, and inappropriate responses caused by adrenal insufficiency, with decreased serum cortisol levels, are common in critical illness and may indicate a poor prognosis [51]. Although the suitability of 11β-HSD as a biomarker of the stress response has not been assessed, an increased serum F/E ratio was observed in a heterogeneous population of critically ill patients, leading to speculation that it reflects increased hemodynamic stability and preserved tissue perfusion that confer survival potential [52].

Because 11β-HSD2, which converts cortisol to cortisone, is active in the parotid gland, salivary cortisone can be measured, and its concentrations are higher than those of salivary cortisol [53,54]. It may be a better biomarker than salivary cortisol for reflecting serum free cortisol levels under physiological conditions and after adrenal stimulation and hydrocortisone administration because it is less affected by CBG; its levels may increase with longstanding glucocorticoid use [33-37]. Both cortisol and cortisone in saliva could be reliable biomarkers in febrile patients because their levels are not affected by temperature. Salivary cortisol, cortisone, and the F/E ratio showed good intraindividual stability as single-point and single-day biomarkers, whereas stability over a 2-week period was not observed [55]. Regarding the individual stability of other biological specimens, hair biomarkers are not absolutely stable but show moderate stability throughout a year. Time-dependent alterations in hair cortisol levels could be attributed to seasonal variation, with lower levels in summer and higher levels in winter [56]. However, hair cortisol is not discussed further here because it reflects chronic stress [3].

CONSIDERING FURTHER TECHNICAL DEVELOPMENT

Currently, cortisol is assessed only in the laboratory; therefore, urgent detection of abnormal cortisol levels for the diagnosis, treatment, and prevention of hypocortisolism is limited. Assessing state-related cortisol levels in blood, saliva, and sweat with ambulatory sensing and wearable devices has also been challenging [57-60]. These novel analytical techniques, based on either affinity to immobilized antibodies or electrical sensing, are promising tools for real-time monitoring and self-testing. In principle, immunoassay and mass spectrometry could be complementary, and further development is necessary to establish diagnostic criteria for different types of assays.

Immunoassay

Because cortisol is less water-soluble than most of its metabolites and is bound to proteins, extraction with an organic solvent before immunoassay may be useful for quantifying low serum cortisol levels in clinical laboratories [61]. However, the organic solvent must be removed because it denatures the antibodies immobilized in the immunoassay, and this step requires technical expertise. Immunoassays are susceptible to interference because of the complexity of antigen–antibody interactions in a complex matrix [62]. The lack of specificity also arises from the different properties of the antibodies used in immunoassays, which leads to variability among commercially available platforms [63,64]. A multicenter comparative study of cortisol measurement showed good inter-laboratory correlation (range, 0.97 to 0.99) when a kit from the same manufacturer was used, whereas correlation coefficients of 0.54 to 0.65 were obtained across different immunoassay platforms [65]. In addition, heterophilic antibodies acquired through transfusion or autoantibodies against the antigen can interfere with immunoassays [14,66]. Significant variation among immunoassays was observed after corticotropin stimulation because corticotropin also stimulates the release of other corticosteroids [66].
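The inter-laboratory and inter-platform figures above are correlation coefficients. A minimal Pearson sketch shows how such a coefficient is computed, and also why a high coefficient alone does not demonstrate agreement: a platform that reads systematically 20% higher still correlates perfectly. The paired values in the usage note are hypothetical, and `mean_ratio` is an illustrative helper, not a standard bias statistic.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient between paired measurements."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired results")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


def mean_ratio(x: Sequence[float], y: Sequence[float]) -> float:
    """Average y/x ratio: a crude indicator of proportional bias between methods."""
    if len(x) != len(y) or not x:
        raise ValueError("need paired, non-empty results")
    return sum(b / a for a, b in zip(x, y)) / len(x)
```

For `lab_a = [150, 280, 410, 520, 650]` and `lab_b = [1.2 * v for v in lab_a]`, `pearson_r` returns 1.0 (to floating-point precision) even though every result differs by 20%, which is why method-comparison studies usually examine bias as well as correlation.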

When cortisol is measured, significant cross-reactivity (11% to 84.3%) has been observed with endogenous cortisol precursors and metabolites, such as 21-deoxycortisol, 5α-dihydrocortisol, and 5β-dihydrocortisol, as well as with the synthetic corticosteroid prednisolone and its 6α-methylated derivative; in contrast, tetrahydrocortisol and corticosterone showed low cross-reactivity [67,68]. This cross-reactivity with structurally similar steroids and the low affinity of steroid antibodies result in overestimation in both serum and saliva samples [64,69]. In particular, 21-deoxycortisol can accumulate to high levels in certain conditions, such as congenital adrenal hyperplasia due to 21-hydroxylase deficiency [70]. Comparisons of cortisol quantification by immunoassay and mass spectrometry have revealed correlation coefficients ranging from 0.43 to 0.97. These inter-assay variations have been reported to complicate the diagnosis of critical illness-related corticosteroid insufficiency [66].
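Cross-reactivity percentages such as those above are typically obtained by spiking a potential interferent into a cortisol-free matrix and reading it as cortisol. The sketch below computes that percentage and then estimates an immunoassay reading under a simplified additive, linear model; real cross-reactivity often varies with concentration, so the model, the function names, and the example concentrations are assumptions for illustration only.

```python
from typing import Dict, Tuple


def cross_reactivity_percent(apparent_cortisol: float, spiked_concentration: float) -> float:
    """Cross-reactivity (%) from a spiking experiment in a cortisol-free matrix."""
    if spiked_concentration <= 0:
        raise ValueError("spiked concentration must be positive")
    return 100.0 * apparent_cortisol / spiked_concentration


def apparent_cortisol(true_cortisol: float, interferents: Dict[str, Tuple[float, float]]) -> float:
    """Immunoassay reading under an additive, linear cross-reactivity model.

    interferents maps a steroid name to (concentration, cross-reactivity %),
    with concentrations in the same molar units as cortisol.
    """
    bias = sum(conc * cr / 100.0 for conc, cr in interferents.values())
    return true_cortisol + bias
```

For example, if 40 nmol/L of a spiked steroid reads as 12 nmol/L cortisol, its cross-reactivity is 30%; a hypothetical patient with 300 nmol/L cortisol and 50 nmol/L of 21-deoxycortisol at that cross-reactivity would then read about 315 nmol/L, a bias that an LC-MS method separating the two steroids would avoid.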

Mass spectrometry

In clinical steroid analysis, mass spectrometry is not performed alone; chromatographic separation before mass spectrometric detection gives the analysis high sensitivity and selectivity compared with immunoassays [71]. Gas chromatography-mass spectrometry is an older technique that requires complex sample pretreatment steps, including deconjugation and vaporization of intact steroid molecules; however, it remains a promising tool in clinical applications [72]. In contrast, LC-MS has been used extensively in clinical laboratories for routine measurement of steroid hormones [73]. Recently, mass spectrometry has been successfully used as a discovery tool to identify novel steroidogenic pathways and to gain new insights into adrenal pathophysiology, and its combination with machine learning algorithms can facilitate diagnosis [74-76].

Given the cross-reactivity of immunoassays, the improved analytical selectivity of mass spectrometry is currently preferred for trace analysis of cortisol and for profiling adrenal steroids, including cortisol and its inactive metabolite cortisone [47,53-55,74-76]. Salivary cortisol in particular is present at low concentrations, whereas cortisone, an endogenous interferent with a cross-reactivity of 0.3% to 31.1% in cortisol immunoassays, is commonly detected in saliva [53,54]. In contrast, mass spectrometry can determine accurate quantities and considerably reduces variation across laboratories. Moreover, exogenous corticosteroids can be assessed simultaneously with target endogenous adrenal steroids [74,77], which can provide new insights into the pathophysiology of adrenal steroidogenesis [78].
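Part of the accuracy of LC-MS quantification comes from the calibration arithmetic commonly used in isotope-dilution assays. The sketch assumes a stable-isotope-labeled internal standard (for example, a deuterated cortisol) added at a fixed amount to every calibrator and sample; this design and the function names are assumptions, not details of the cited methods. Because the analyte and its labeled standard co-elute and ionize similarly, their peak-area ratio compensates for losses during sample preparation and for matrix effects. Unweighted least squares is used here for brevity, although weighted regression is also common.

```python
from typing import Sequence, Tuple


def fit_calibration(conc: Sequence[float], area_ratio: Sequence[float]) -> Tuple[float, float]:
    """Unweighted least-squares line: area_ratio = slope * conc + intercept."""
    n = len(conc)
    if n != len(area_ratio) or n < 2:
        raise ValueError("need at least two paired calibrators")
    mx = sum(conc) / n
    my = sum(area_ratio) / n
    sxx = sum((x - mx) ** 2 for x in conc)
    if sxx == 0:
        raise ValueError("calibrator concentrations must differ")
    slope = sum((x - mx) * (y - my) for x, y in zip(conc, area_ratio)) / sxx
    return slope, my - slope * mx


def quantify(analyte_area: float, internal_std_area: float, slope: float, intercept: float) -> float:
    """Concentration of an unknown from its analyte/internal-standard peak-area ratio."""
    if internal_std_area <= 0 or slope == 0:
        raise ValueError("invalid internal standard area or calibration slope")
    ratio = analyte_area / internal_std_area
    return (ratio - intercept) / slope
```

With hypothetical calibrators at 1, 5, 10, 50, and 100 nmol/L whose area ratios follow 0.02 × concentration + 0.001, an unknown with an analyte/internal-standard area ratio of 0.401 quantifies at 20 nmol/L, regardless of how much absolute signal was lost during preparation.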

CONCLUSIONS

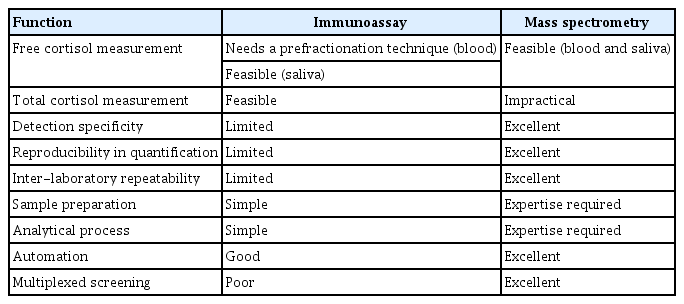

This review discusses the analytical and diagnostic properties of free cortisol measurement. Clinical mass spectrometry is undoubtedly the gold standard for steroid assessment and offers improved diagnostic specificity. Mass spectrometry coupled with chromatographic separation will be more reliable for determining multiplexed steroid panels to support diagnosis and monitoring in different clinical settings. However, the simplicity and practical availability of immunoassays remain in demand, and their use should be driven by assay performance in single-analyte screening. Immunoassay and mass spectrometry are orthogonal but should be used complementarily to address unmet clinical needs, because the limitations of one technique can be overcome by the other (Table 1).

In addition to hair cortisol measurement for evaluating chronic stress, salivary assays have been extensively considered because samples are easy to obtain and salivary levels reflect serum levels of biologically active free cortisol after adrenal stimulation and hydrocortisone replacement. However, optimized thresholds for individual specimens and sampling protocols must be established in large-scale population studies before these assays can be applied clinically under various conditions, such as the type of analytical method and sample collection time, in different pathologies. Inter-laboratory accreditation is a perennial challenge for clinical quality control, and the selection of analytical methods should be dictated by clinical needs, not by assay technology.

Acknowledgements

This study was supported by a grant from the Korea Institute of Science and Technology Institutional Program (Project No. 2E3161C), and a grant from the Korea Health Technology R&D Project through the Korea Health Industry Development Institute, funded by the Ministry of Health and Welfare of the Republic of Korea (Project No. HI21C0032).

Notes

CONFLICTS OF INTEREST

No potential conflict of interest relevant to this article was reported.